
Lab G - Scalability

Learning Objectives

In this lab you will:

- Learn about horizontal scalability.

- Understand how a load balancer works.

- Learn to consolidate logging in a horizontally scaled environment.

Lab Setup At A Glance

In this lab we will demonstrate how applications are scaled horizontally. Specifically, we will run a web application built with the Flask web framework behind a load balancer. We will use the versatile Nginx as the load balancer. It is fast and reliable, and it comes with a variety of configurable algorithms for balancing load across servers on a network. We will spin up multiple instances of the same Flask web application behind the load balancer so that we can get a clear picture of how traffic is distributed by a load-balancing application like Nginx.

                     +----LOAD BALANCER---+   +---CLIENT:BROWSER----+   ++++++++++++++
                     | Docker: nginx      |---| host computer       |---| Internet   |
              +------| http://nginx:80    |   | http://localhost:80 |   ++++++++++++++
              |      +--------------------+   +---------------------+
              |
    +----SERVER:Flask----+
    | Docker: webapp     |--+
    | http://webapp:8080 |  |
    +--------------------+  |
       | 1 or more instances|
       +--------------------+

Before you begin

Prep your lab environment.

- Open the PowerShell Prompt

- Change the working directory folder to

ist346-labs

PS > cd ist346-labs - IMPORTANT: This lab requires access to Docker’s internals, you must enter this command:

PS ist346-labs> $Env:COMPOSE_CONVERT_WINDOWS_PATHS=1 - Update your git repository to the latest version:

PS ist346-labs> git pull origin master - Change the working directory to the

lab-Gfolder:

PS ist346-labs> cd lab-G - Start the lab environment in Docker. This version is just a single instance of the web app:

PS ist346-labs\lab-G> docker-compose -f one.yml up -d - Verify your

nginxload balancer and three instances of yourwebappare up and running:PS ist346-labs\lab-G> docker-compose -f one.yml ps

You should seenginxhas tcp port80. Thewebappis running on tcp port8080, but only exposes itself to the proxy. - Open a web browser like Chrome on your host. Enter the address

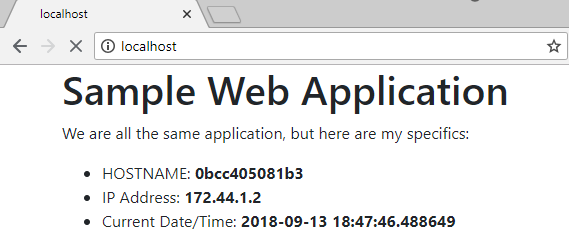

http://localhost:80and you will see the sample web application:

NOTE: The webpage is designed to reload every 3 seconds so that you can see what happens on subsequent HTTP requests. No need to hit the refresh button in your browser!

Understanding the Sample Web Application

There’s some really important information on the page of the Sample Web Application. It’s designed to help you understand the behavior of the load balancer.

- HOSTNAME: This indicates the host name of the running webapp docker container which served the page. Currently on page refresh we get the same host name each time. That’s because there is only one webapp container running on the backend of the load balancer.

- IP Address: This displays the IP Address of the docker container which served the page. Again you will see the same IP address on each page refresh because we only have one running instance of the webapp container.

- Current Date/Time: This displays the current date/time on the server. This information should change with each request. Its primary purpose is to help you see that new content is being loaded with each request.
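To make the three values concrete, here is a minimal sketch of how a Python process could collect them using only the standard library. The function name `instance_info` and the dictionary keys are hypothetical; this is not the lab's actual Flask code, only an illustration of where such values can come from.

```python
import socket
from datetime import datetime


def instance_info():
    """Collect the three values the sample page displays (hypothetical helper)."""
    hostname = socket.gethostname()
    try:
        ip_address = socket.gethostbyname(hostname)
    except OSError:
        # Name resolution can fail outside a container network.
        ip_address = "unknown"
    return {
        "hostname": hostname,
        "ip_address": ip_address,
        "current_datetime": datetime.now().isoformat(timespec="seconds"),
    }


if __name__ == "__main__":
    for label, value in instance_info().items():
        print(f"{label}: {value}")
```

Inside a Docker container the host name is normally the container's own name or ID, which is why each instance behind the load balancer reports a different value.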

Scaling the app

Let’s scale our app to 3 instances and then observe what happens.

- To scale the webapp service so there are 3 instances (instead of 1), we must bring down the current application:

PS ist346-labs\lab-G> docker-compose -f one.yml down

- Then we bring up a different configuration that supports load-balancing, type:

PS ist346-labs\lab-G> docker-compose -f roundrobin.yml up -d --scale webapp=3

- Go back to your browser running the Statistics Report and click the Refresh Now link. You should now see 3 instances of the lab-g-_webapp container. We have scaled the app.

- Go back to your browser running the Sample Web Application on http://localhost:80. Notice now when the page refreshes, on each request you get one of three different HOSTNAMEs and IP Addresses.

- The load balancer cycles through each of the 3 docker containers in a predictable pattern. That’s because the load balancer is configured to distribute the load using the roundrobin algorithm. You can learn more about it here: https://en.wikipedia.org/wiki/Round-robin_scheduling.
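The predictable pattern can be sketched in a few lines of Python. This is an illustration of the round robin idea only, not Nginx's implementation, and the instance names are hypothetical stand-ins for the three webapp containers.

```python
from itertools import cycle


def round_robin(backends):
    """Return an iterator that hands out backends in a fixed, repeating order."""
    return cycle(backends)


# Hypothetical names standing in for the three webapp containers.
backends = ["webapp_1", "webapp_2", "webapp_3"]
picker = round_robin(backends)

# Seven consecutive page loads.
served_by = [next(picker) for _ in range(7)]
print(served_by)
# ['webapp_1', 'webapp_2', 'webapp_3', 'webapp_1', 'webapp_2', 'webapp_3', 'webapp_1']
```

Each request goes to the next instance in the list, and after the last one the rotation starts over, which matches the repeating HOSTNAME sequence you see in the browser.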

Turning it up to 11

If your app is designed to scale horizontally, then in principle you can keep adding instances as demand grows. Large clusters running Hadoop, a system designed to scale horizontally, have thousands of nodes. The problem, of course, is that writing an app to scale horizontally is not a trivial task. The biggest issue is making data available to each node participating on the back end and dealing with updates to that data.
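The data problem is easy to reproduce in a small simulation. The sketch below assumes a hypothetical visit counter that each instance keeps in its own memory, then compares it with a counter kept in one shared store. The `Instance` class and the dictionary standing in for a shared store are illustrative only and are not part of the lab.

```python
from itertools import cycle


class Instance:
    """A backend that keeps a visit counter in its own memory."""

    def __init__(self, name):
        self.name = name
        self.visits = 0

    def handle(self):
        self.visits += 1
        return self.visits


instances = [Instance(f"webapp_{i}") for i in (1, 2, 3)]
rotation = cycle(instances)

# Six requests spread round robin over three instances.
counts_seen = [next(rotation).handle() for _ in range(6)]
print(counts_seen)  # [1, 1, 1, 2, 2, 2]

# Keeping the counter in one shared place gives every instance the same view.
shared_store = {"visits": 0}


def handle_with_shared_store():
    shared_store["visits"] += 1
    return shared_store["visits"]


shared_counts = [handle_with_shared_store() for _ in range(6)]
print(shared_counts)  # [1, 2, 3, 4, 5, 6]
```

With per-instance state, the same user sees the count jump around depending on which instance answers. A shared store fixes that, but it then has to handle updates arriving from every node.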

Different Load Balancing Algorithms

As we explained in the previous section, the default load balancing algorithm is round robin. Let’s explore two other load balancing algorithms: leastconn and uri hash.

leastconn

The leastconn algorithm selects the instance with the fewest active connections. If a node is busy serving a client, the next request will not use that node but will instead go to another available node. Let’s demonstrate this.

- First bring down your existing roundrobin setup, type:

PS ist346-labs\lab-G> docker-compose -f roundrobin.yml down

- Then start the environment using the leastconn configuration:

PS ist346-labs\lab-G> docker-compose -f leastconn.yml up -d --scale webapp=3

- Now let’s return to the browser where we have the Sample Web Application running on http://localhost:80. At first glance, it seems to work the same as before, rotating evenly through each instance.

- Let’s put that to the test. We are going to access another url which keeps the instance busy so that the other browser cannot use that connection. Open another browser in a new window (CTRL+n does this), and arrange the windows so you can see both at the same time. In the new window let’s request the Sample Web Application but instead use this url: http://localhost/slow/10

- You’ll see that the browser accessing http://localhost now only uses ONE or TWO of the three instances. The other instance is busy fulfilling the http://localhost/slow/10 request! When that request finishes, the other browser will once again use all three instances to fulfill requests.
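The behavior you just observed can be sketched as a small simulation. This is a simplified model, not Nginx's code: the instance names are hypothetical, and breaking ties by rotating through equally idle instances is an assumption made for this sketch.

```python
def pick_least_connections(order, active):
    """Pick the backend with the fewest active connections.

    `order` is a rotation list used only to break ties, so equally idle
    backends take turns (an assumption of this sketch).
    """
    fewest = min(active[name] for name in order)
    chosen = next(name for name in order if active[name] == fewest)
    order.remove(chosen)
    order.append(chosen)  # move to the back so ties rotate
    return chosen


# Hypothetical instance names; each starts with no open connections.
order = ["webapp_1", "webapp_2", "webapp_3"]
active = {name: 0 for name in order}

# A slow request (like /slow/10) holds one connection open.
slow_backend = pick_least_connections(order, active)
active[slow_backend] += 1

# Fast page loads finish at once, so they never add lasting load.
fast_served_by = []
for _ in range(4):
    backend = pick_least_connections(order, active)
    active[backend] += 1  # connection opens
    fast_served_by.append(backend)
    active[backend] -= 1  # connection closes right away

print(slow_backend)    # webapp_1
print(fast_served_by)  # ['webapp_2', 'webapp_3', 'webapp_2', 'webapp_3']

# Once the slow request finishes, the busy instance rejoins the rotation.
active[slow_backend] -= 1
print(pick_least_connections(order, active))  # webapp_1
```

While the slow request is open, the fast page loads only ever land on the two idle instances, which mirrors what the two browser windows show.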

uri hash

The uri hash algorithm selects an instance based on a hash of the URI (Uniform Resource Identifier). This algorithm differs from leastconn and roundrobin in that a given URI will always map to the same instance. Let’s see a demo.

- First bring down your existing leastconn environment, type:

PS ist346-labs\lab-G> docker-compose -f leastconn.yml down

- Then start the environment using the hash configuration:

PS ist346-labs\lab-G> docker-compose -f hash.yml up -d --scale webapp=3

- Now let’s return to the browser where we have the Sample Web Application running on http://localhost. Notice how with every page load we get the same instance. This is because that URI maps to the instance you see.

- Let’s try another URI in the browser. Type http://localhost/a and you will get a different instance. If you reload the page (you must do this manually) you will still get the same instance for this URI.

- Let’s try a 3rd URI in the browser. Type http://localhost/b and you will get yet another different instance. If you reload the page (you must do this manually) you will still get the same instance for this URI.

- Going back to either http://localhost, http://localhost/a, or http://localhost/b will always yield a response from the same instance. That’s how uri mapping works!
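The mapping from URI to instance can be sketched as a hash followed by a modulo. The sketch below uses CRC32 from Python's standard library purely for illustration. The hash function, the instance names, and the helper `pick_by_uri` are assumptions and do not describe how Nginx computes its hash.

```python
import zlib


def pick_by_uri(uri, backends):
    """Map a URI to one backend; the same URI always yields the same backend."""
    digest = zlib.crc32(uri.encode("utf-8"))
    return backends[digest % len(backends)]


# Hypothetical names standing in for the three webapp containers.
backends = ["webapp_1", "webapp_2", "webapp_3"]

for uri in ["/", "/a", "/b", "/a", "/"]:
    print(uri, "->", pick_by_uri(uri, backends))
```

Repeated URIs in the loop print the same instance each time. Note that two different URIs can still land on the same instance, since there are far more possible URIs than instances.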

There are other algorithms which work in this manner. Consider their applications. Imagine distributing load based on geographical location, browser type, operating system, user attributes, etc. This offers a greater degree of flexibility in how we balance load.

Tear down

This concludes our lab. Time for a tear down!

- From the PowerShell prompt, type:

PS ist346-labs\lab-G> docker-compose -f hash.yml down

to tear down the docker containers used in this lab.

Questions

- How is horizontal scalability as demonstrated in this lab different from the vertical scalability of the previous lab?

- What do we call multiple copies of the service we scale horizontally?

- What are the two things Nginx does as explained in this lab?

- Why is scaling out to more nodes/instances easier than scaling back to fewer nodes/instances?

- Type the docker compose command to scale the service myservice to 7 instances.

- How does the leastconn algorithm differ from the roundrobin algorithm? How are they similar?

- What are some potential uses of the uri load balancer algorithm?

- In spite of being load balanced, does our environment still have a single point of failure? If so, what is it? Do you have thoughts as to how this can be remedied?